
ParseHub is another web scraping tool, this time a freemium one, that lets users extract data by simply clicking the page elements they want to target. Its point-and-click interface claims to require no coding knowledge, no lengthy training, and little complex bot setup. This review examines ParseHub's main features, its user interface, the available plans, and how to set up proxy servers when they are needed.

The software is a desktop application for Windows, Mac, and Linux, available to download from the ParseHub website. The first step is to enter the URL of the website that contains the required data. The user then picks the elements to extract using selectors. With the help of artificial intelligence, ParseHub analyzes the structure of the page so that the same extraction can be repeated on other pages and websites. Finally, ParseHub visits the specified pages, downloads the data or files, and organizes them in an easy-to-use format.
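The select-once, reuse-everywhere idea behind ParseHub's selectors can be illustrated with a tiny extractor built on Python's standard `html.parser`. This is a generic sketch, not ParseHub's engine: a plain class name stands in for a selector, and `ClassTextExtractor`, `extract`, the `product-title` class, and the sample HTML are all hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains `target_class`."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.depth = 0                  # > 0 while inside a matching element
        self.buffer: list[str] = []     # text pieces of the current match
        self.results: list[str] = []    # one entry per matched element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1             # a tag nested inside the current match
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1              # entering a matching element

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:             # the matching element just closed
            self.results.append("".join(self.buffer).strip())
            self.buffer = []

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


def extract(html: str, target_class: str) -> list[str]:
    """Apply one 'selector' (a class name) to a page and return the matched texts."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Two hypothetical pages built from the same template: one selector serves both.
page_1 = ('<ul><li><span class="product-title">Desk lamp</span></li>'
          '<li><span class="product-title">Office <b>chair</b></span></li></ul>')
page_2 = '<ul><li><span class="product-title">Bookshelf</span></li></ul>'
for page in (page_1, page_2):
    print(extract(page, "product-title"))
```

The point of the example is that the selector is chosen once and then applied unchanged to every page sharing the layout, which is what makes multi-page scraping cheap to set up.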

ParseHub lets users highlight elements on a web page and use them to fill out forms or collect images. Extraction workflows for images and data are built by moving the highlighted elements into the application's workspace, where they are turned into selectors. Because of this graphical approach, no programming is required, which is a major advantage for users who have never written code.

The platform can quickly and reliably extract information from pages built with embedded JavaScript or other interactive elements. It handles interactions such as clicking buttons, scrolling, opening context menus, playing media, expanding collapsible comment sections, filling out forms, and selecting from dropdown menus, as well as content loaded through Ajax and JavaScript.

The service can collect data either with its traditional built-in methods or in the cloud. For instance, the collected information can be stored in Dropbox or AWS S3. This simplifies data management, team sharing, and analysis, and lets several tasks run at once without the hassle of processing the information manually.

ParseHub is also well known for its ability to download images from websites. The program is easy to configure and operate, and it processes images and pages in batch mode, saving them to the hard disk or to the cloud, which makes it an excellent tool for image-collection scraping tasks.

ParseHub can be integrated with reputable proxy providers to avoid detection, which is important because it lets users scrape through multiple IP addresses. This matters most when a job needs large data sets from websites with strong anti-scraping protection, since spreading requests across IPs helps bypass the mechanisms that flag suspicious scraping behavior. A proxy sits between the user's computer and the target website, which helps the user evade detection and reach restricted material.
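Using multiple IPs usually means sending successive requests through different proxies from a pool. The sketch below shows simple round-robin rotation with Python's standard library. It is a generic illustration, not how ParseHub rotates addresses internally; the proxy URLs (documentation-range IPs with dummy credentials), `PROXY_POOL`, `next_opener`, and `fetch` are hypothetical placeholders.

```python
import itertools
import urllib.request

# Placeholder pool: documentation-range IPs and dummy credentials, not real proxies.
PROXY_POOL = [
    "http://user:pass@203.0.113.10:8080",
    "http://user:pass@203.0.113.11:8080",
    "http://user:pass@203.0.113.12:8080",
]

# itertools.cycle walks the pool in order and wraps around forever (round-robin).
_rotation = itertools.cycle(PROXY_POOL)


def next_opener() -> tuple[str, urllib.request.OpenerDirector]:
    """Pick the next proxy and build an opener that routes HTTP and HTTPS through it."""
    proxy = next(_rotation)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch one URL through the next proxy in the pool (makes a real network request)."""
    _, opener = next_opener()
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Each call to `fetch` leaves through the next address in the pool. Rotating on every request is the simplest policy; some setups instead keep one proxy per session so that cookies and logins stay consistent.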

ParseHub can also fill in password fields for authentication, allowing users to collect data from password-protected content. This extends the tool to a wider range of sources, including pages where the target data is confidential or private. ParseHub is also said to include features that detect and solve CAPTCHAs automatically, so CAPTCHA challenges do not interrupt the flow of data extraction.

ParseHub also lets users automate and control scraping programmatically through its API. This makes it easier to connect the extracted data with other programs and workflows, which is especially useful for developers and businesses that rely on extensive, dynamic data integration. The system also supports webhooks, which notify users when a run completes or its status changes, so they can react to or adjust the scraping in real time.
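A webhook receiver mostly has to decode the notification and decide whether the run's data is ready to download. The sketch below is written under stated assumptions: it assumes the POST body carries the run's `status`, `run_token`, and `data_ready` fields, either form-encoded or as JSON, so check ParseHub's API documentation for the exact format. `handle_notification` and the messages it returns are hypothetical.

```python
import json
from urllib.parse import parse_qs

# Assumed run fields and status values; verify against ParseHub's API documentation.
TERMINAL_STATUSES = {"complete", "error", "cancelled"}


def parse_run_notification(body: bytes, content_type: str) -> dict:
    """Decode a webhook POST body into a flat dict of run fields."""
    text = body.decode("utf-8")
    if content_type.startswith("application/json"):
        return json.loads(text)
    # Otherwise assume form encoding and keep the first value of each field.
    return {key: values[0] for key, values in parse_qs(text).items()}


def is_data_ready(run: dict) -> bool:
    """True once the run finished successfully and its data can be downloaded."""
    flag = str(run.get("data_ready", "")).lower()   # handles True, 1, "1", "true"
    return run.get("status") == "complete" and flag in {"1", "true"}


def handle_notification(body: bytes, content_type: str) -> str:
    """Decide what to do with one notification (hypothetical workflow)."""
    run = parse_run_notification(body, content_type)
    if is_data_ready(run):
        return f"fetch data for run {run.get('run_token')}"
    if run.get("status") in TERMINAL_STATUSES:
        return f"run {run.get('run_token')} ended with status {run['status']}"
    return "still running"
```

In practice these functions would sit behind whatever web framework receives the POST; keeping the parsing separate from the HTTP layer makes the logic easy to test.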

Users can also schedule scraping tasks to run at specific intervals, which makes operations more efficient. In addition, ParseHub offers data preprocessing, cleaning, and formatting, and the results can be saved to cloud storage or local storage, or retrieved through the API.
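ParseHub performs its own cleaning inside the app; if an exported file still needs tidying, the same kind of post-processing can be done in a few lines of plain Python. This is a minimal sketch: the field names `name` and `price`, the `clean_rows` function, and the sample rows are hypothetical.

```python
import re


def clean_rows(rows: list[dict]) -> list[dict]:
    """Trim whitespace, parse prices, and drop empty or duplicate rows from an export."""
    seen = set()
    cleaned = []
    for row in rows:
        name = " ".join(str(row.get("name", "")).split())   # collapse runs of whitespace
        price_text = str(row.get("price", "")).replace(",", "")
        match = re.search(r"\d+(?:\.\d+)?", price_text)      # first number in the field
        price = float(match.group()) if match else None
        key = (name.lower(), price)
        if not name or key in seen:
            continue                                         # skip blanks and duplicates
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "  Desk   lamp ", "price": "$1,299.00"},
    {"name": "Desk lamp", "price": "1299"},
    {"name": "", "price": "$5"},
    {"name": "Office chair", "price": "n/a"},
]
result = clean_rows(raw)
print(result)
```

Normalizing values before comparing them is what lets the two differently formatted "Desk lamp" rows be recognized as duplicates.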

ParseHub is a freemium web scraping service with both free and paid subscription plans. The free version is solid and well suited to most small projects: it costs nothing, and users only need to register on the platform.

The free plan allows 5 public projects. Users can process up to 200 pages every 40 minutes, with a maximum of 200 pages per run. When the limit is reached, tasks are queued and completed after the quota refreshes. Data collected under this plan is kept for only 14 days.

The “Standard” plan costs $189 per month. It raises the limits so that users can scrape 200 pages every 10 minutes and up to 10,000 pages per run. Data remains accessible for 14 days. This plan also includes 20 projects, export to Dropbox or Amazon S3, IP rotation, and task scheduling.

The “Professional” subscription costs $599 per month. It allows users to scrape up to 200 pages every 2 minutes, with no limit on the number of pages per run. Users can manage up to 120 private projects, data is retained for 30 days, and all the features of the Standard plan are included.

Users who need specialized solutions can opt for ParseHub Plus, which is priced individually. This plan includes professional data collection, one-off or recurring (for example, bimonthly) jobs, priority support with a dedicated account manager, and custom solutions tailored to the client's needs.

| Plan | Everyone | Standard | Professional | ParseHub Plus |
|---|---|---|---|---|
| Price | Free | $189 per month | $599 per month | Custom |
| Processing time for 200 pages | 40 minutes | 10 minutes | 2 minutes | Unlimited |
| Pages per run | 200 pages | 10,000 pages | Unlimited | Unlimited |
| Number of projects | 5 projects | 20 projects | 120 projects | Unlimited |
| Data retention period | 14 days | 14 days | 30 days | Custom |
| Export to Dropbox / Amazon S3 | – | + | + | + |
| IP rotation | – | + | + | + |
| Project scheduling | – | + | + | + |
| Priority support | – | – | + | + |
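The page-rate limits in the table are easier to compare as hourly throughput. The sketch below uses only the figures quoted in the table (200 pages per 40, 10, or 2 minutes) and assumes a job is processed in whole 200-page batches; `pages_per_hour` and `estimated_minutes` are illustrative helpers, not ParseHub features.

```python
import math

# Minutes per 200 pages for each plan, taken from the plan table.
MINUTES_PER_200_PAGES = {"Everyone": 40, "Standard": 10, "Professional": 2}


def pages_per_hour(plan: str) -> float:
    """Sustained throughput implied by the plan's rate limit."""
    return 200 * 60 / MINUTES_PER_200_PAGES[plan]


def estimated_minutes(plan: str, pages: int) -> int:
    """Rough duration of a job: whole 200-page batches times minutes per batch.

    Ignores the per-run page cap (200 on the free plan, 10,000 on Standard),
    so a 1,000-page job on the free plan would in practice span several runs.
    """
    batches = math.ceil(pages / 200)
    return batches * MINUTES_PER_200_PAGES[plan]


for plan in MINUTES_PER_200_PAGES:
    print(f"{plan}: {pages_per_hour(plan):.0f} pages/hour, "
          f"{estimated_minutes(plan, 1_000)} min for 1,000 pages")
```

By this estimate, a 1,000-page job takes about 200 minutes on the free plan, 50 on Standard, and 10 on Professional.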

Subscribing to any plan for three months or more automatically applies a 15% discount. Custom plans can also be arranged by contacting ParseHub directly.
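To see what the discount means in practice, the sketch below applies 15% to the total of a multi-month term. It assumes the discount applies to the whole term's cost at the list prices quoted above; `term_cost_cents` and `fmt` are illustrative helpers, and amounts are kept in cents so no floating-point rounding creeps in.

```python
# Monthly list prices quoted in this review, in cents.
MONTHLY_PRICE_CENTS = {"Standard": 18_900, "Professional": 59_900}
DISCOUNT_PERCENT = 15  # applied to subscriptions of three months or more


def term_cost_cents(plan: str, months: int) -> int:
    """Total cost of a term, with the 15% discount applied from three months up."""
    full = MONTHLY_PRICE_CENTS[plan] * months
    if months >= 3:
        # Exact here, because the list prices are whole dollars.
        return full * (100 - DISCOUNT_PERCENT) // 100
    return full


def fmt(cents: int) -> str:
    """Format an amount in cents as dollars, e.g. 152745 -> $1,527.45."""
    return f"${cents // 100:,}.{cents % 100:02d}"


print(fmt(term_cost_cents("Standard", 3)))      # $481.95
print(fmt(term_cost_cents("Professional", 3)))  # $1,527.45
```

Under that assumption, three months of Standard cost $481.95 instead of $567, and three months of Professional cost $1,527.45 instead of $1,797.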

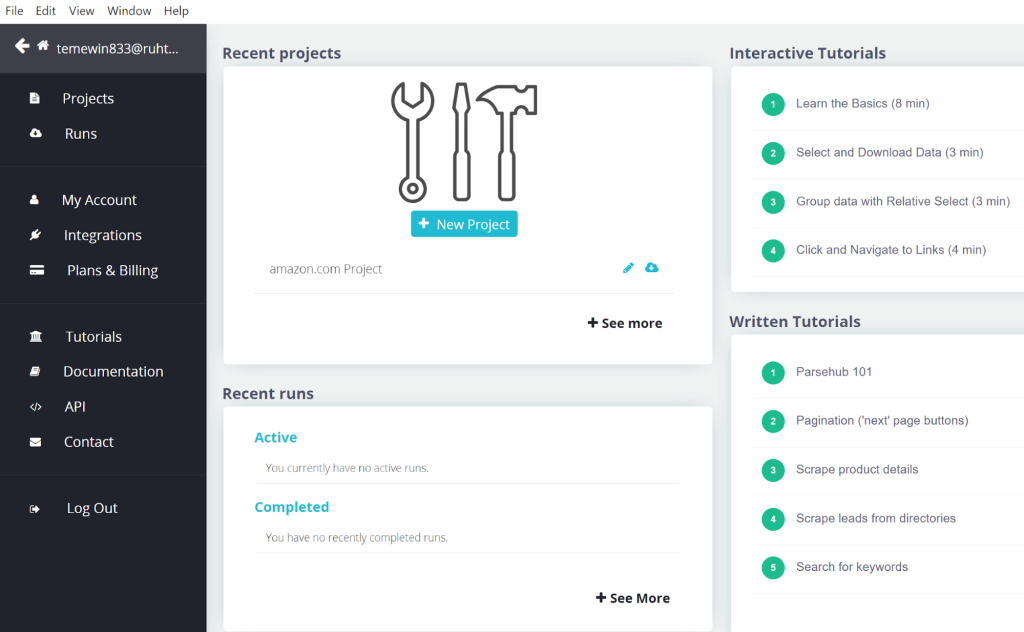

The ParseHub interface is designed for ease of use and has a straightforward layout. The left panel holds the main functions for quick access to other sections and settings. The workspace on the right is where the user creates and manages projects, configures data scraping algorithms, and starts scraping. The interface can also be zoomed in or out by pressing Ctrl together with “+” or “-”.

The main control panel provides easy access to frequently used sections such as “Recent projects” and “Recent runs”. New users can also get familiar with the platform through its built-in guides.

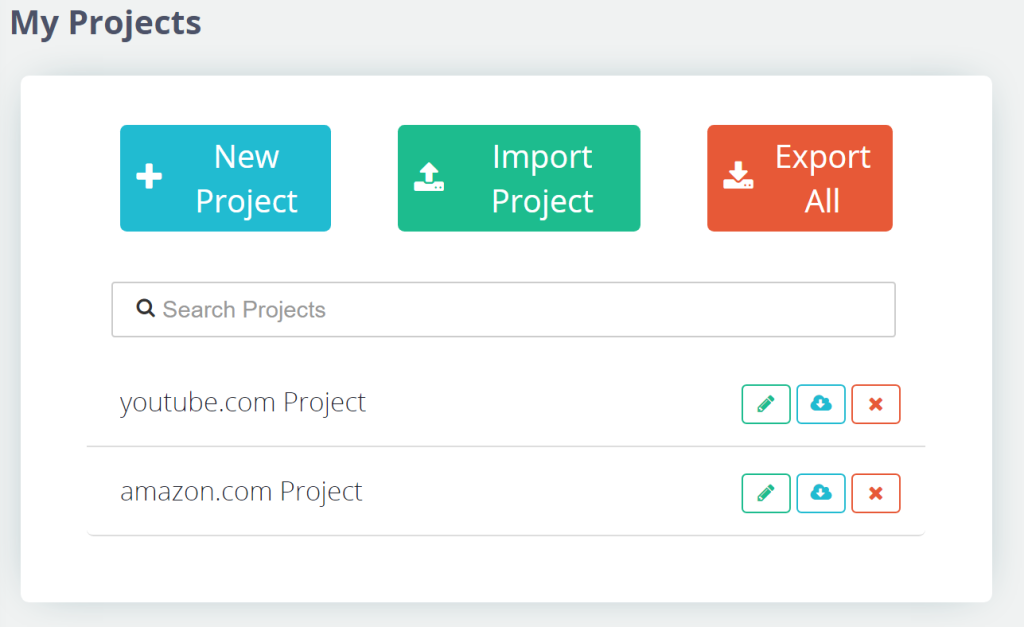

In the “Projects” section, users can create new projects and start scraping by clicking the corresponding button, and they can manage the projects they have already created. This section shows the current state and progress of every project, giving full control over the scraping process.

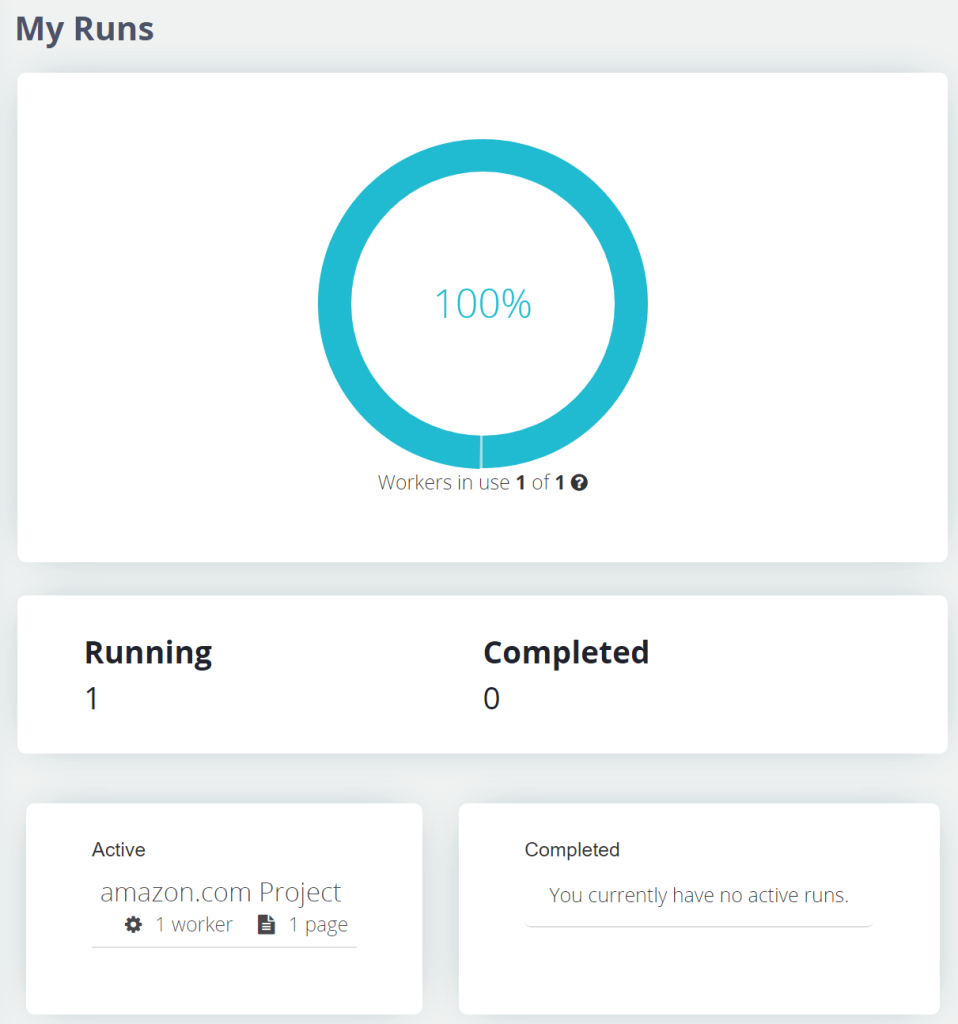

The “Runs” section contains detailed project statistics and activity statuses for launched, active, and completed runs. This helps users manage their web scraping projects effectively.

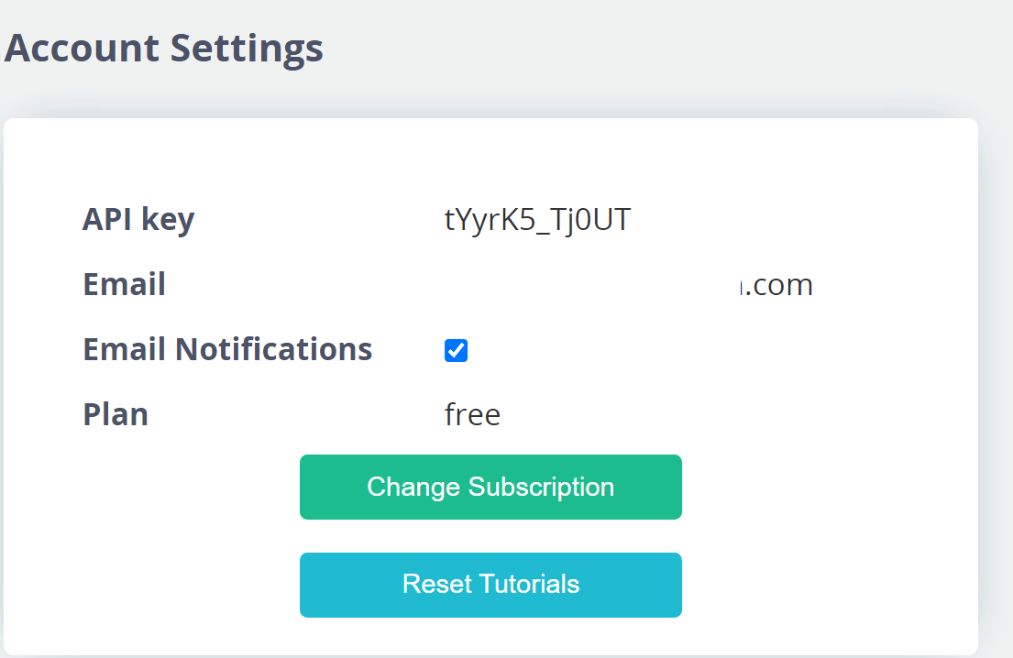

In the “My Account” section, users can manage account details such as their API key and email address, as well as subscription information. This section also offers upgrade options and instructions.

In the “Integrations” section, users can view and check the status of ParseHub’s integrations with Dropbox, AWS S3, and other services, which extend the platform’s data management options.

The “Plans & Billing” section directs users to a page showing the details of their current plan and the history of all payments, where they can also make a payment.

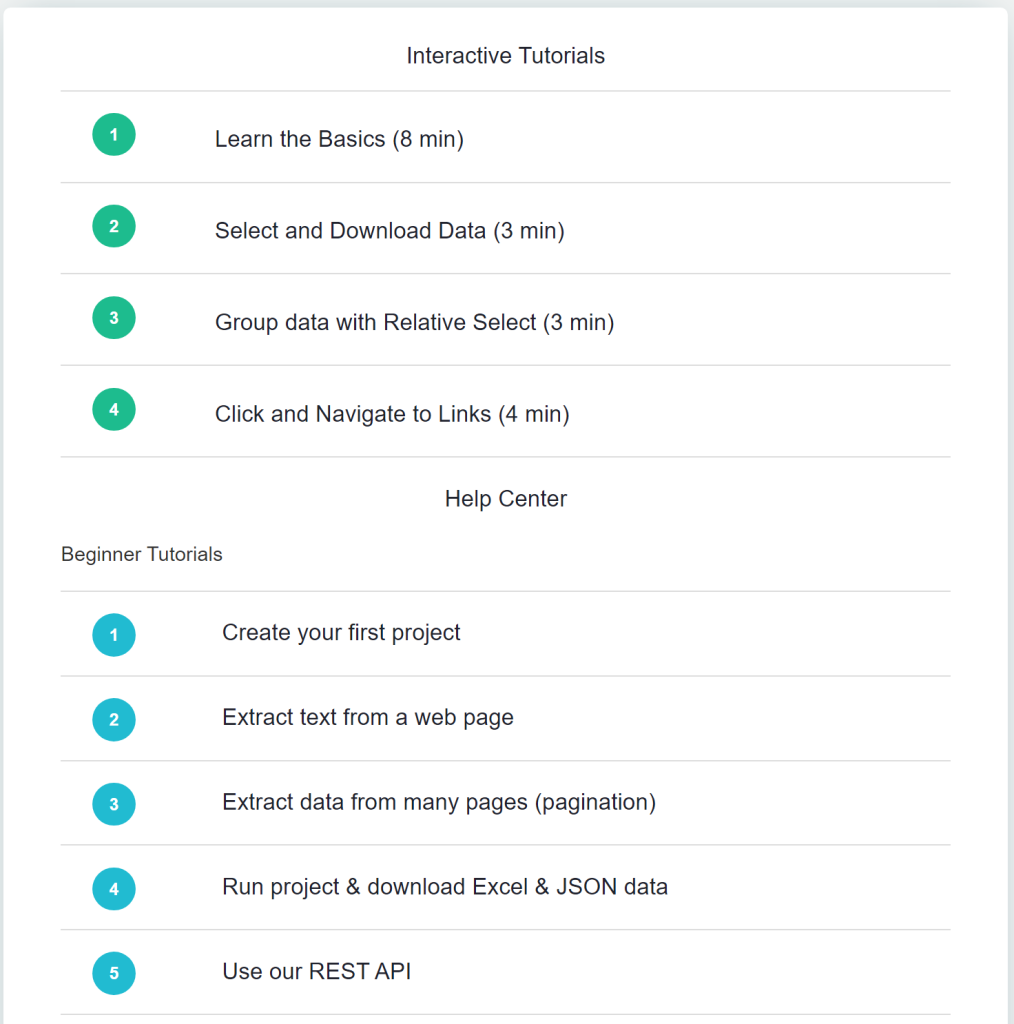

The “Tutorials” section provides a comprehensive collection of learning materials, including step-by-step guides, video tutorials, API documentation, FAQs, and troubleshooting tips to help users maximize their use of ParseHub.

The “Documentation” section is a gateway to the Help Center, which contains extensive information on various web scraping methods and on how to get the most out of ParseHub.

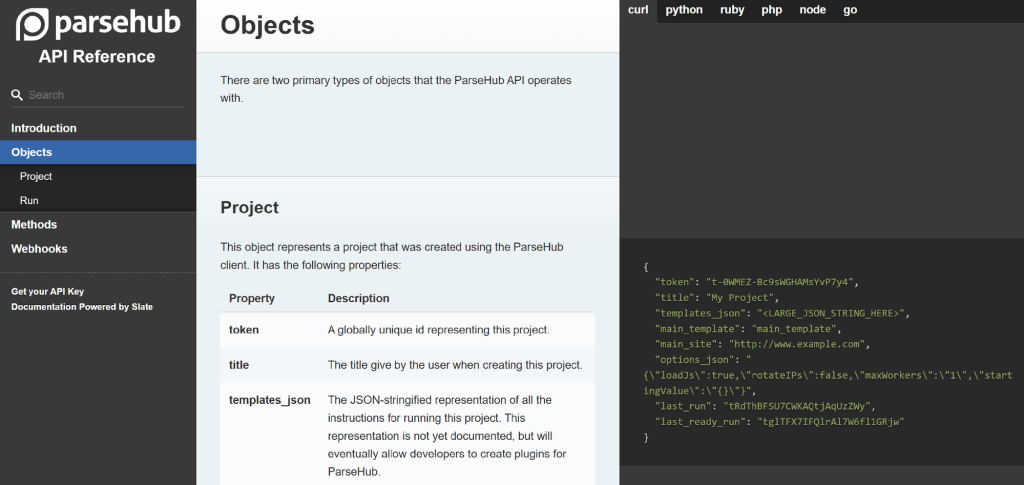

The “API” section includes detailed instructions and sample code in cURL, Python, Ruby, PHP, Node.js, and Go, which make it easy for advanced users to integrate with the ParseHub API.
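A minimal Python sketch of that integration, using only the standard library, is shown below. It reflects our understanding of ParseHub's v2 REST API (a POST to start a project run, a GET to download a run's data) and should be checked against the “API” section of your account. The helper names are hypothetical, and the API key and tokens are placeholders.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Base URL and paths as we understand ParseHub's v2 REST API; verify before use.
API_BASE = "https://www.parsehub.com/api/v2"


def build_run_request(api_key: str, project_token: str,
                      start_url: Optional[str] = None) -> urllib.request.Request:
    """Build (without sending) a POST request that starts a new run of a project."""
    params = {"api_key": api_key}
    if start_url:
        params["start_url"] = start_url  # optional override of the project's start URL
    body = urllib.parse.urlencode(params).encode("ascii")
    return urllib.request.Request(f"{API_BASE}/projects/{project_token}/run",
                                  data=body, method="POST")


def build_data_request(api_key: str, run_token: str) -> urllib.request.Request:
    """Build a GET request for a finished run's results in JSON format."""
    query = urllib.parse.urlencode({"api_key": api_key, "format": "json"})
    return urllib.request.Request(f"{API_BASE}/runs/{run_token}/data?{query}")


def start_run(api_key: str, project_token: str) -> str:
    """Start a run and return its token (makes a real network call)."""
    request = build_run_request(api_key, project_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["run_token"]
```

Building the requests separately from sending them keeps the URL and parameter logic testable offline. The data endpoint may return gzip-compressed content, so check the response's `Content-Encoding` header and decompress with `gzip.decompress` if needed.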

Finally, the “Contact” section lets users reach ParseHub’s support team for help resolving problems.

Using proxy servers with ParseHub offers several advantages that enhance the efficiency and safety of data collection:

Incorporating proxy servers into ParseHub setups, therefore, significantly enhances the tool's capability to gather data seamlessly and securely, ensuring uninterrupted access and reducing the likelihood of detection.

This article outlines the process for configuring proxy servers in ParseHub, emphasizing the importance of choosing the right type of proxy for effective data collection:

For professional scraping, free proxy services are not suitable: they are oversaturated, are the most likely to be detected and blocked outright, and can pose a security risk. Paid proxies are a better investment, offering higher efficiency and security for web scraping.